TL;DR

We propose a novel 3D GAN training method that improves the synthesis quality of side-view images using only pose-imbalanced in-the-wild datasets (e.g., FFHQ, CelebAHQ, and AFHQ).

Abstract

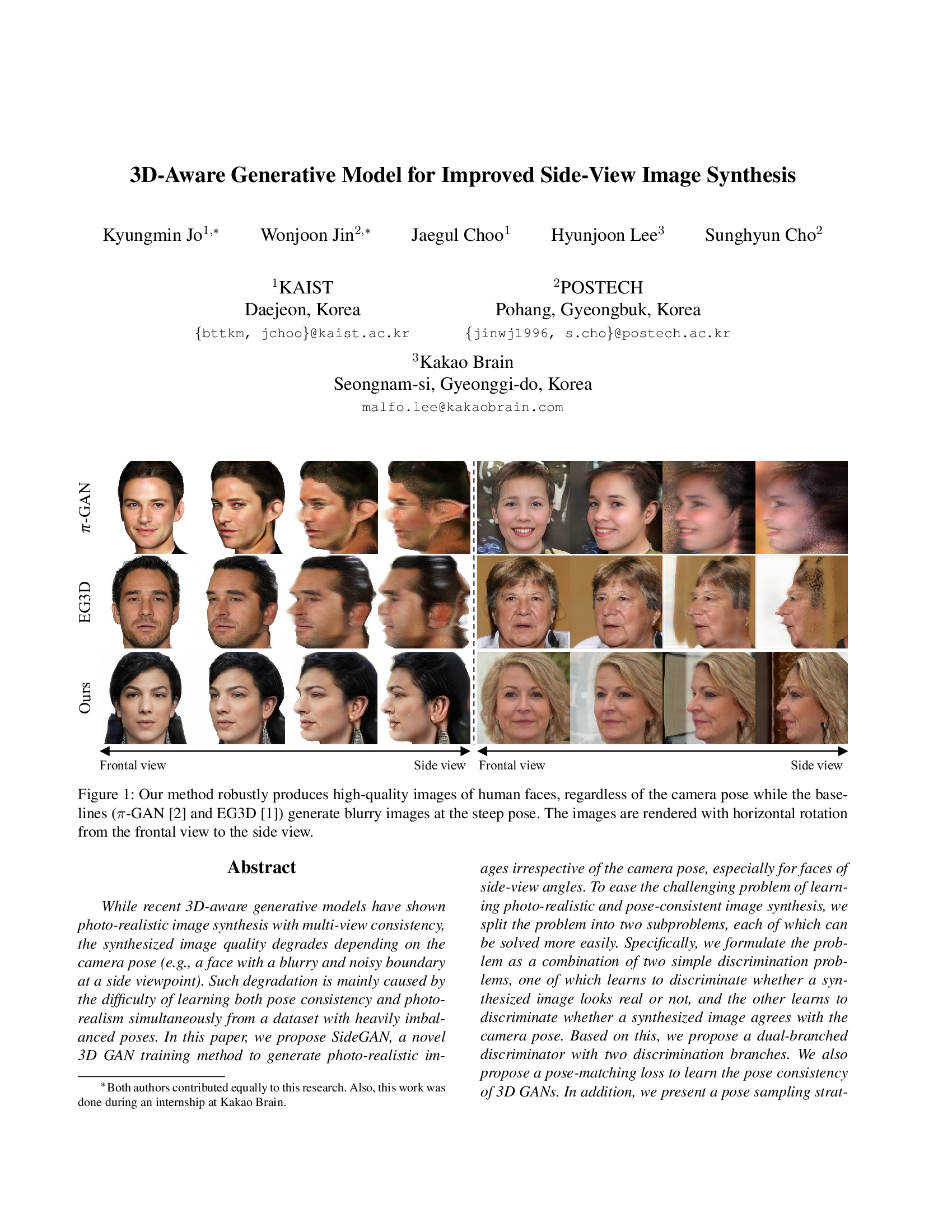

While recent 3D-aware generative models have shown photo-realistic image synthesis with multi-view consistency, the synthesized image quality degrades depending on the camera pose (e.g., a face with a blurry and noisy boundary at a side viewpoint). Such degradation is mainly caused by the difficulty of learning both pose consistency and photo-realism simultaneously from a dataset with heavily imbalanced poses. In this paper, we propose SideGAN, a novel 3D GAN training method to generate photo-realistic images irrespective of the camera pose, especially for faces of side-view angles. To ease the challenging problem of learning photo-realistic and pose-consistent image synthesis, we split the problem into two subproblems, each of which can be solved more easily. Specifically, we formulate the problem as a combination of two simple discrimination problems, one of which learns to discriminate whether a synthesized image looks real or not, and the other learns to discriminate whether a synthesized image agrees with the camera pose. Based on this, we propose a dual-branched discriminator with two discrimination branches. We also propose a pose-matching loss to learn the pose consistency of 3D GANs. In addition, we present a pose sampling strategy to increase learning opportunities for steep angles in a pose-imbalanced dataset. With extensive validation, we demonstrate that our approach enables 3D GANs to generate high-quality geometries and photo-realistic images irrespective of the camera pose.
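As a rough illustration of the pose sampling idea mentioned in the abstract, the sketch below mixes yaw angles drawn from a frontal-heavy dataset with yaw angles drawn uniformly over a wide range, so that steep angles are seen more often during training. This is only a minimal sketch under stated assumptions, not the sampling scheme used by SideGAN: the function names, the 50/50 mixing probability, the ±90 degree range, and the Gaussian toy dataset are all hypothetical.

```python
import random


def sample_yaw(dataset_yaws, steep_prob=0.5, max_yaw=90.0, rng=random):
    """Return one yaw angle (degrees) for rendering a training image.

    Assumption: with probability `steep_prob` the yaw is drawn uniformly
    from [-max_yaw, max_yaw]; otherwise a yaw observed in the dataset is
    reused. Both parameters are illustrative, not values from the paper.
    """
    if rng.random() < steep_prob:
        return rng.uniform(-max_yaw, max_yaw)
    return rng.choice(dataset_yaws)


def steep_fraction(yaws, threshold=45.0):
    """Fraction of yaws whose magnitude exceeds `threshold` degrees."""
    return sum(abs(y) > threshold for y in yaws) / len(yaws)


rng = random.Random(0)

# Hypothetical pose-imbalanced dataset: yaws concentrated near frontal views.
dataset_yaws = [rng.gauss(0.0, 15.0) for _ in range(10_000)]

# Yaws produced by the mixed sampling strategy.
mixed_yaws = [sample_yaw(dataset_yaws, rng=rng) for _ in range(10_000)]

print("steep fraction, dataset only:", steep_fraction(dataset_yaws))
print("steep fraction, mixed sampling:", steep_fraction(mixed_yaws))
```

In this toy setting the mixed sampler visits steep yaws far more often than the dataset distribution alone, which is the kind of added learning opportunity the abstract describes.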

Video Results

The following videos show synthesis results of SideGAN and baseline methods on diverse datasets.

FFHQ

CelebAHQ

More rendering examples

Citation

@InProceedings{Jo_2023_ICCV,
    author    = {Jo, Kyungmin and Jin, Wonjoon and Choo, Jaegul and Lee, Hyunjoon and Cho, Sunghyun},
    title     = {3D-Aware Generative Model for Improved Side-View Image Synthesis},
    booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
    month     = {October},
    year      = {2023},
    pages     = {22862-22872}
}

Acknowledgement

The website template is borrowed from EG3D.